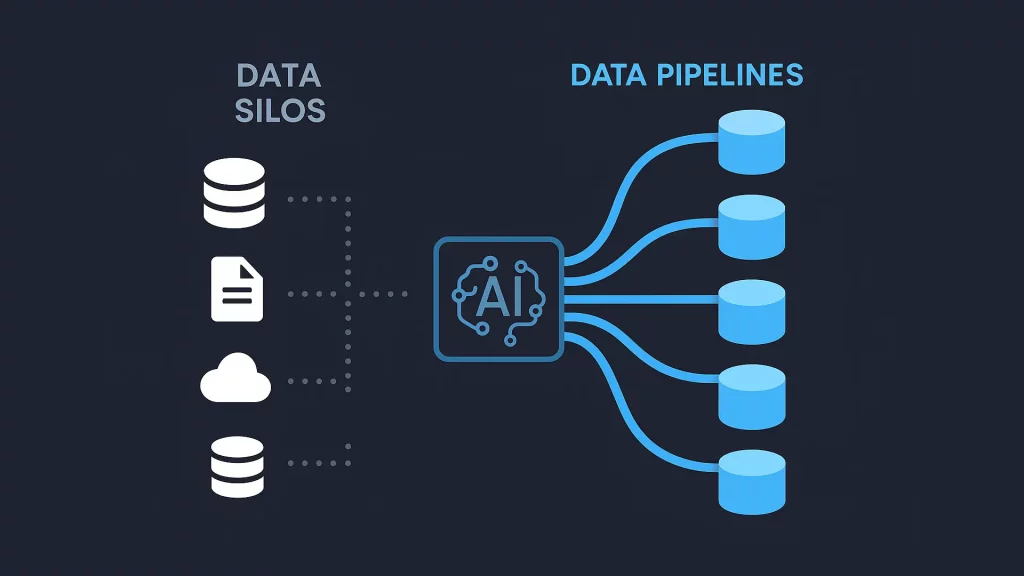

Modern AI systems are impressive, but here’s the hard truth: they’re often stuck behind data silos, disconnected tools, and custom integrations that make real-world deployments a nightmare.

I have lost track of how often I’ve had to manually lift content from one platform just to drop it into tools like Claude or ChatGPT. Skipping that step? It usually means building yet another custom integration for every single data source. It’s inefficient at best and a serious barrier to innovation at worst.

Enter: Model Context Protocol (MCP)

MCP is an open standard, introduced by Anthropic in November 2024, that makes it much easier to plug LLMs into real tools, apps, and data. Rather than relying on fragmented, custom-built integrations, MCP offers one standardized way to connect AI systems to enterprise data and tools. That breaks down data silos and gives models the relevant context they need to produce better results at scale.

Think of MCP like USB-C for AI, a universal connector that replaces the mess of one-off integrations with a clean, open protocol for working across your entire ecosystem.

What Makes Up MCP?

The Model Context Protocol (MCP) architecture consists of four key components that work together to bridge AI models with real-world data and tools:

1. Host Application

This is the application where users interact with large language models (LLMs). It initiates connections to MCP servers and passes user inputs to the AI. Examples include Claude Desktop, AI-powered IDEs like Cursor, and other chat applications that support MCP.

2. MCP Client

Embedded within the host application, the MCP client manages communication with MCP servers. A host typically runs one client per server, and each client keeps a one-to-one connection with its server. The client translates requests into MCP's message format and relays the server's responses back to the LLM. For instance, Claude Desktop includes a built-in MCP client to streamline this process.

3. MCP Server

The MCP server exposes specific capabilities through the protocol: tools the AI can call, resources it can read, and reusable prompts. It acts as a bridge between the AI and an external system, and each server typically integrates with one particular system, such as GitHub for code repositories or PostgreSQL for databases, giving the AI context it can use.
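To make the server's role concrete, here is a minimal sketch of the dispatch a server performs: it keeps a registry of tools and answers the tools/list and tools/call methods. It uses only the Python standard library and is not the official MCP SDK; the `ToyServer` class and the `get_issue_count` tool are hypothetical, and a real server would also handle initialization, resources, prompts, and argument validation.

```python
from typing import Any, Callable, Dict, Tuple


class ToyServer:
    """Hypothetical, stdlib-only stand-in for an MCP server's tool dispatch."""

    def __init__(self) -> None:
        # Maps tool name -> (description, handler function).
        self._tools: Dict[str, Tuple[str, Callable[..., str]]] = {}

    def tool(self, name: str, description: str) -> Callable:
        """Decorator that registers a function as a named tool."""
        def register(fn: Callable[..., str]) -> Callable[..., str]:
            self._tools[name] = (description, fn)
            return fn
        return register

    def handle(self, request: Dict[str, Any]) -> Dict[str, Any]:
        """Answer one JSON-RPC request for tools/list or tools/call."""
        method = request.get("method")
        req_id = request.get("id")
        if method == "tools/list":
            tools = [{"name": n, "description": d} for n, (d, _) in self._tools.items()]
            return {"jsonrpc": "2.0", "id": req_id, "result": {"tools": tools}}
        if method == "tools/call":
            params = request.get("params", {})
            entry = self._tools.get(params.get("name"))
            if entry is None:
                # -32602 (invalid params) is used here for an unknown tool.
                return {"jsonrpc": "2.0", "id": req_id,
                        "error": {"code": -32602, "message": "Unknown tool"}}
            text = entry[1](**params.get("arguments", {}))
            return {"jsonrpc": "2.0", "id": req_id,
                    "result": {"content": [{"type": "text", "text": text}],
                               "isError": False}}
        # -32601 is JSON-RPC's standard "method not found" code.
        return {"jsonrpc": "2.0", "id": req_id,
                "error": {"code": -32601, "message": "Method not found"}}


server = ToyServer()


@server.tool("get_issue_count", "Count open issues in a repository (hypothetical).")
def get_issue_count(repo: str) -> str:
    # A real server would query GitHub here; this returns canned data.
    return f"{repo} has 3 open issues"


response = server.handle({
    "jsonrpc": "2.0", "id": 1, "method": "tools/call",
    "params": {"name": "get_issue_count", "arguments": {"repo": "octo/demo"}},
})
print(response["result"]["content"][0]["text"])  # octo/demo has 3 open issues
```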

4. Transport Layer

This layer handles communication between the client and the server. MCP supports two transport methods:

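- STDIO (Standard Input/Output): Ideal for local, same-machine integrations. The client launches the server as a subprocess and exchanges messages over its standard input and output.

- HTTP + SSE (Server-Sent Events): Used for remote setups, allowing responses to be streamed asynchronously. Newer revisions of the MCP specification replace this transport with “Streamable HTTP”, which can still use SSE for streaming.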
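The STDIO transport frames each JSON-RPC message as one line of JSON with no embedded newlines. The sketch below shows only that framing step; `encode_message` and `decode_messages` are hypothetical helper names, and a real transport would also spawn the server process and cope with partial reads.

```python
import json
from typing import Any, Dict, Iterator


def encode_message(message: Dict[str, Any]) -> bytes:
    """Serialize one JSON-RPC message as a single newline-terminated line."""
    # json.dumps escapes any newline inside string values as \n, so the only
    # raw newline in the output is the delimiter appended here.
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")


def decode_messages(data: bytes) -> Iterator[Dict[str, Any]]:
    """Split received bytes on newlines and parse each non-empty line."""
    for line in data.split(b"\n"):
        if line.strip():
            yield json.loads(line)


# Two messages written back to back, as they would appear on the pipe.
wire = (encode_message({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
        + encode_message({"jsonrpc": "2.0", "method": "notifications/initialized"}))
for msg in decode_messages(wire):
    print(msg["method"])
```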

All communication within MCP follows the JSON-RPC 2.0 format, ensuring consistency in how requests, responses, and notifications are structured and exchanged.
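JSON-RPC 2.0 has three message shapes: a request carries an id and expects a reply, a response echoes that id with either a result or an error, and a notification has no id and gets no reply. The hypothetical helpers below sketch those shapes as plain dictionaries; they are illustrations, not part of any MCP SDK.

```python
import itertools
from typing import Any, Dict, Optional

_next_id = itertools.count(1)  # request ids only need to be unique per session


def make_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """A request has an id, so the receiver must answer it."""
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def make_notification(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """A notification has no id, so no response is expected."""
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def make_result(request_id: int, result: Dict[str, Any]) -> Dict[str, Any]:
    """A successful response echoes the request id and carries a result."""
    return {"jsonrpc": "2.0", "id": request_id, "result": result}


def make_error(request_id: int, code: int, message: str) -> Dict[str, Any]:
    """An error response echoes the request id and carries an error object."""
    return {"jsonrpc": "2.0", "id": request_id,
            "error": {"code": code, "message": message}}


def kind(msg: Dict[str, Any]) -> str:
    """Classify a message by which fields it contains."""
    if "method" in msg:
        return "request" if "id" in msg else "notification"
    return "response"


req = make_request("tools/list")
print(kind(req), kind(make_result(req["id"], {"tools": []})))  # request response
```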

Under the Hood: How MCP Works

To better understand the power of MCP, let’s walk through how it functions behind the scenes, step by step. From establishing connections to retrieving real-time data, each phase ensures AI can access the most relevant context from your tools and systems, securely and seamlessly.

Step #1: Protocol handshake

- Initial connection: When a host application such as Claude Desktop launches, its MCP clients connect to each configured MCP server. Client and server exchange an initialize request and response to agree on a protocol version and on the features each side supports.

- Capability discovery: The client then asks each server, in effect, “What do you support?” Each server replies with its available capabilities, such as tools to fetch GitHub issues, read documents, or run database queries.

- Capability registration: The client registers these capabilities, making them available to the AI model during user interactions so it can call on them whenever the conversation needs them.
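The handshake can be sketched as the ordered messages a client sends. Assuming the flow in the MCP specification, the client opens with an initialize request, confirms with a notifications/initialized notification once the server has answered, and can then call tools/list. The protocol version string, the client name, and the `register_tools` helper are assumptions for illustration.

```python
from typing import Any, Dict, List

PROTOCOL_VERSION = "2025-03-26"  # assumption: use a revision your server supports


def handshake_messages(client_name: str) -> List[Dict[str, Any]]:
    """Messages a client sends, in order, before using a server's tools."""
    return [
        {"jsonrpc": "2.0", "id": 1, "method": "initialize",
         "params": {"protocolVersion": PROTOCOL_VERSION,
                    "capabilities": {},
                    "clientInfo": {"name": client_name, "version": "0.1.0"}}},
        # Sent after the server's initialize response; a notification has no id.
        {"jsonrpc": "2.0", "method": "notifications/initialized"},
        # Capability discovery: ask the server which tools it offers.
        {"jsonrpc": "2.0", "id": 2, "method": "tools/list"},
    ]


def register_tools(registry: Dict[str, Dict[str, Any]], server_name: str,
                   tools_list_result: Dict[str, Any]) -> None:
    """Capability registration: remember which server provides which tool."""
    for tool in tools_list_result.get("tools", []):
        registry[tool["name"]] = {
            "server": server_name,
            "description": tool.get("description", ""),
            "inputSchema": tool.get("inputSchema", {}),
        }


registry: Dict[str, Dict[str, Any]] = {}
register_tools(registry, "weather", {"tools": [
    {"name": "get_forecast", "description": "Forecast for a city (hypothetical)",
     "inputSchema": {"type": "object", "required": ["city"]}},
]})
print(registry["get_forecast"]["server"])  # weather
```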

With those connections in place, say you ask Claude, “What’s the weather like in Delhi today?” Here’s what happens:

Step #2: Need recognition

Claude analyzes your question and recognizes it needs external, real-time information that wasn’t in its training data.

Step #3: Tool or resource selection

Claude picks the MCP capability that fits your request, such as a weather tool exposed by a connected server, based on the names and descriptions registered during the handshake.

Step #4: Permission request

The client displays a permission prompt asking if you want to allow access to the external tool or resource.
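A client can enforce this step by refusing to send anything until the user says yes. In this minimal sketch, `ask_user` and `send` are hypothetical callbacks standing in for the host’s UI and the transport; the point is only that the call is gated on consent.

```python
from typing import Any, Callable, Dict


class PermissionDenied(Exception):
    """Raised when the user declines a tool call."""


def call_with_consent(tool_name: str, arguments: Dict[str, Any],
                      ask_user: Callable[[str], bool],
                      send: Callable[[str, Dict[str, Any]], Any]) -> Any:
    """Forward the tool call to the server only if the user approves it."""
    if not ask_user(f"Allow the tool '{tool_name}' with arguments {arguments}?"):
        # Nothing is sent to the server on refusal.
        raise PermissionDenied(tool_name)
    return send(tool_name, arguments)


result = call_with_consent(
    "get_forecast", {"city": "Delhi"},
    ask_user=lambda prompt: True,            # stand-in for a UI dialog
    send=lambda name, args: f"sent {name}",  # stand-in for the transport
)
print(result)  # sent get_forecast
```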

Step #5: Information exchange

Once you approve, the client sends a JSON-RPC tools/call request to the appropriate MCP server, naming the tool and the arguments to pass (here, the city).
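For the Delhi question, the request might look like the one built below. The tool name `get_forecast` and its `city` argument are hypothetical; a real client would use whatever name and inputSchema the server advertised in tools/list. The sketch also checks the schema’s required fields before sending, which is a design choice rather than a protocol requirement.

```python
import json
from typing import Any, Dict


def build_tool_call(request_id: int, tool: Dict[str, Any],
                    arguments: Dict[str, Any]) -> str:
    """Check required arguments against the tool's inputSchema, then build tools/call."""
    required = tool.get("inputSchema", {}).get("required", [])
    missing = [key for key in required if key not in arguments]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    request = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool["name"], "arguments": arguments},
    }
    return json.dumps(request)


forecast_tool = {  # as it might appear in a tools/list result (hypothetical)
    "name": "get_forecast",
    "inputSchema": {"type": "object",
                    "properties": {"city": {"type": "string"}},
                    "required": ["city"]},
}
print(build_tool_call(3, forecast_tool, {"city": "Delhi"}))
```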

Step #6: External processing

The MCP server processes the request, performing whatever action is needed—querying a weather service, reading a file, or accessing a database.

Step #7: Result return

The server returns the result to the client as a JSON-RPC response, typically a list of content items such as text.
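On the client side, the response has to be turned into text the model can read. The sketch below assumes the tools/call result shape from the MCP specification (a content list plus an isError flag) and keeps two failure modes apart: a JSON-RPC error means the request itself failed, while isError means the tool ran but reported a problem, which is still worth showing to the model. `extract_text` is a hypothetical helper name.

```python
from typing import Any, Dict, Tuple


def extract_text(response: Dict[str, Any]) -> Tuple[str, bool]:
    """Return (text, is_error) from a tools/call response."""
    if "error" in response:
        # Protocol-level failure, e.g. an unknown tool or a malformed request.
        raise RuntimeError(response["error"].get("message", "unknown error"))
    result = response["result"]
    texts = [item["text"] for item in result.get("content", [])
             if item.get("type") == "text"]
    # isError means the tool ran but failed; the text is still returned so the
    # model can see what went wrong.
    return "\n".join(texts), bool(result.get("isError", False))


reply = {"jsonrpc": "2.0", "id": 3,
         "result": {"content": [{"type": "text", "text": "Delhi: clear skies"}],
                    "isError": False}}
print(extract_text(reply))  # ('Delhi: clear skies', False)
```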

Step #8: Context integration

Claude receives this information and incorporates it into its understanding of the conversation.

Step #9: Response generation

Claude generates a response that includes the external information, providing you with an answer based on current data.

Ideally, this entire process happens in seconds, creating an unobtrusive experience where Claude appears to “know” information it couldn’t possibly have from its training data alone.

Security You Can Trust

MCP builds its security on established standards, but developers still have to apply them carefully. Key areas include:

1. OAuth 2.1-based authorization with PKCE

Because MCP’s authorization for HTTP-based transports follows standard OAuth flows, it carries the same risks as any other OAuth deployment. Developers should guard against open redirect vulnerabilities, store and handle tokens securely, and use PKCE (Proof Key for Code Exchange) for every authorization code flow.

2. Securing MCP with OAuth Best Practices

Because the current MCP authorization specification adopts a subset of OAuth 2.1, the usual OAuth concerns still apply. Knowing the common OAuth misconfigurations helps developers avoid security gaps.

- Principle of Least Privilege – only access what’s necessary

Server developers should strictly follow the principle of least privilege and request only the minimum access each feature needs. This reduces the chance that a server is accidentally exposed to sensitive data and limits the damage from supply chain attacks that exploit unsecured connections between resources.
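One practical way to apply least privilege is to derive the OAuth scopes a server requests from the tools it actually enables, and to flag anything beyond that. The tool names and scope strings below are hypothetical placeholders, not scopes defined by MCP or GitHub.

```python
from typing import Dict, Iterable, List

# Hypothetical mapping from each tool to the one scope it needs.
TOOL_SCOPES: Dict[str, str] = {
    "list_issues": "issues:read",
    "comment_on_issue": "issues:write",
}


def minimal_scopes(enabled_tools: Iterable[str]) -> List[str]:
    """The smallest scope set that still covers every enabled tool."""
    return sorted({TOOL_SCOPES[tool] for tool in enabled_tools})


def excess_scopes(requested: Iterable[str], enabled_tools: Iterable[str]) -> List[str]:
    """Scopes being requested that no enabled tool actually needs."""
    return sorted(set(requested) - set(minimal_scopes(enabled_tools)))


print(minimal_scopes(["list_issues"]))                                # ['issues:read']
print(excess_scopes(["issues:read", "repo:admin"], ["list_issues"]))  # ['repo:admin']
```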

- Human-in-the-loop design to prevent automation abuse

Human-in-the-loop design is key to protecting MCP users. Because clients must ask users for explicit permission before accessing tools or resources, each action passes through a checkpoint that makes automated exploits harder to carry out. That protection, however, is only as good as the permission prompt: it must be clear enough for users to understand the proposed scopes and make an informed decision.
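A clear prompt states who is asking, what will run, with which inputs, and what access it needs. The sketch below builds such a prompt as plain text; the server name, tool name, and scope string in the usage line are hypothetical, and a real host would render this in its own UI.

```python
from typing import Any, Dict, List


def consent_prompt(server: str, tool: str, arguments: Dict[str, Any],
                   scopes: List[str]) -> str:
    """Build a permission prompt that spells out exactly what will happen."""
    lines = [f"The server '{server}' wants to run the tool '{tool}'."]
    if arguments:
        lines.append("With these arguments:")
        lines.extend(f"  {key} = {value!r}" for key, value in sorted(arguments.items()))
    if scopes:
        lines.append("Access requested: " + ", ".join(sorted(scopes)))
    else:
        lines.append("Access requested: none beyond this single call")
    lines.append("Allow once? [y/N]")
    return "\n".join(lines)


print(consent_prompt("github", "comment_on_issue", {"issue": 42}, ["issues:write"]))
```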

By following industry best practices, developers can avoid common misconfigurations and help keep every connection safe, private, and auditable.

Why MCP Matters for the Future of AI

AI isn’t just about generating text—it’s about interacting with your world. From automating customer support to powering technical diagnostics, AI systems need reliable, secure, and scalable ways to work with your data and tools.

With Model Context Protocol, developers and enterprises can:

- Build AI agents that actually do things

- Avoid writing dozens of custom APIs and wrappers

- Move faster without compromising on safety or scale

Final Thoughts

Model Context Protocol is more than just a technical convenience—it’s a foundation for the next generation of AI applications. By simplifying integration and unlocking real-time context, MCP helps AI become truly useful, adaptable, and enterprise-ready.

If you’re building or deploying AI solutions, it’s time to ditch the patchwork and plug into the future with MCP.